Hello People,

Today I will write about an issue we had with one of our Sitecore apps hosted on Azure. Everything was working fine until one fine morning, when our CD site started returning a "502 Bad Gateway" error page.

To my surprise, I hit the refresh button 4-5 times to double-check whether it was really down. It was, and we had no clue why: we had not deployed anything, so why had it suddenly stopped working?

Problem

The CD sites suddenly started returning "502 Bad Gateway", out of the blue.

Troubleshooting & Solution

To resolve this issue we tried everything possible, but the very first thing I did was blame the Azure infrastructure or the App Service plan the app was running under. We had not deployed anything, no new code and no new binaries, so why would it stop? I got on a call with our DevOps team to discuss the possibilities from an infrastructure point of view, and together we went through the following steps until the issue was resolved:

1) We started digging into the logs and found random exceptions. I had no idea why they were occurring or where they came from. I was also not very concerned about them, because the app was not responding at all, and I never imagined that these exceptions could be what brought the app down.

2) I compared this Azure app's logs with another environment that was also hosted on Azure (production) and did not find those exceptions there, which surprised me a little. We had a couple of exceptions like the one below, which I could not explain:

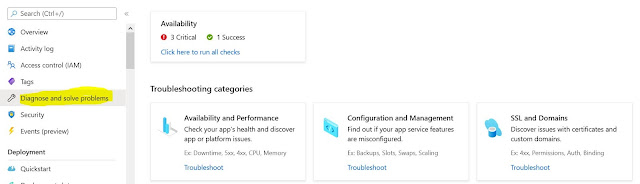

3) We then opened the "Diagnose and solve problems" option for that specific CD app in Azure and looked at the "Availability and Performance" category.

We found that almost all requests to the app were failing. Platform availability was 100%, but app availability had dropped to 0-5%, and almost every request was turning into a 502 Bad Gateway. Technically, the app was completely down and could not serve anything.

Now we knew that something was wrong behind the scenes in the application itself, and that it was not an Azure infrastructure issue.

4) To learn more about the nature of the exceptions, dependencies, and call telemetry, I opened the app's associated Application Insights resource. It showed repetitive exceptions, which convinced us that traffic was definitely reaching the app, but that something was going wrong because of these exceptions and the gateway was marking the app as a "Bad Gateway".

5) Next we looked at the configuration of the app gateway. It was configured so that if the app did not come online within a specific time, Azure would treat it as a bad gateway. Given these exceptions, it seemed possible that the app was making calls that did not respond in time, and so the gateway was timing out.

That looked logical to us. This is what we observed in Application Insights:

There was a large number of similar dependency exceptions. We corrected the configuration that caused them, and the exception count dropped, but the app still did not come up and kept returning the same "502 Bad Gateway".

6) We noticed that the app was on the Azure S1 plan (1.75 GB of memory per instance), so we decided to scale it up to S3 (7 GB) to get more memory and see whether that helped. To our surprise, the app started working: the "Bad Gateway" was gone, although performance was still very poor. We concluded that something was wrong with memory, because the app worked the moment we increased it.

Now it was time to find out what was going wrong with memory. We had also seen memory-related exceptions in our earlier Azure app diagnosis.

7) We decided to capture a memory dump to see what might have gone wrong at the time of the crash, and we also raised tickets with Microsoft and Sitecore to get more insight.

The memory dump revealed some important information.

Memory Dump Analysis

Luckily, Sitecore's analysis also pointed towards memory. These were the observations:

In the memory dump, the garbage collector was running, and two long-running ContentSearch indexing threads were waiting for the GC to complete.

About 2.7 GB of memory was allocated to System.String objects, and these strings were being used by the Event Queue.

Additionally, one of our items, which stores a big XML string, held a huge 6 MB string in one field. This was then multiplied by the EventQueue, jobs, etc., and easily took up 700+ MB of memory.
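A rough consistency check on the 700+ MB figure. The copy count is an inference, not something the dump reported, and it assumes every copy of the field value costs the same amount of memory. Because .NET stores `System.String` as UTF-16 (2 bytes per character), a value of about 6 million characters would occupy roughly 12 MB per copy rather than 6 MB:

$$
\frac{700\ \text{MB}}{6\ \text{MB per copy}} \approx 117\ \text{copies}
\qquad\text{or}\qquad
\frac{700\ \text{MB}}{2 \times 6\ \text{MB per copy}} \approx 58\ \text{copies}
$$

Either way, a few dozen to around a hundred in-memory copies of a single field value, held by queued events and jobs, is enough to reach that total.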

Next we checked the EventQueue, which had around 300K entries. This confirmed the likely explanation for why the app had been working fine before and then stopped suddenly: the EventQueue must have grown significantly by that time.

So we cleared the EventQueue table in the core, master, and web databases.

There were a couple of other findings too:

Our CD had "sitecore_web_index" using the "onPublishEndAsyncSingleInstance" strategy. This is the default configuration; however, in a scaled environment only one instance (e.g. the CM) should run this indexing strategy.

An improvement was made in Sitecore 9.2 with the addition of a new Indexing sub-role, which can be combined with the ContentManagement role on the desired instance.

Regardless of how many CM/CD instances you have, only one CM is supposed to perform indexing operations; the other instances should have this strategy set to "manual".
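On Sitecore 9.2 or later, the Indexing sub-role mentioned above is switched on through the `role:define` app setting in each instance's web.config. This is a minimal sketch, assuming one dedicated CM does all indexing; confirm the exact values against the Sitecore documentation for your version:

```xml
<!-- web.config, appSettings section (Sitecore 9.2+) -->
<appSettings>
  <!-- On the single CM instance that should perform indexing -->
  <add key="role:define" value="ContentManagement, Indexing" />
  <!-- On any other CM instance: value="ContentManagement" -->
  <!-- On each CD instance: value="ContentDelivery" -->
</appSettings>
```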

So we changed our CD configs to use the following strategy:

```xml
<strategy ref="contentSearch/indexConfigurations/indexUpdateStrategies/manual" />
```
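The strategy line above normally lives inside an include (patch) file that targets only CD instances. The file below is a hedged sketch, assuming Sitecore 9.x role-based configuration (`role:require`) and that the stock web index definition contains the `onPublishEndAsyncSingleInstance` strategy; the file name is hypothetical, and you should confirm in `/sitecore/admin/showconfig.aspx` that `patch:delete` actually removed the old strategy in the merged config:

```xml
<?xml version="1.0" encoding="utf-8"?>
<!-- Hypothetical file name: App_Config/Include/zzz/CD.WebIndex.Manual.config -->
<configuration xmlns:patch="http://www.sitecore.net/xmlconfig/"
               xmlns:role="http://www.sitecore.net/xmlconfig/role/">
  <!-- Applied only where role:define is ContentDelivery -->
  <sitecore role:require="ContentDelivery">
    <contentSearch>
      <configuration>
        <indexes hint="list:AddIndex">
          <index id="sitecore_web_index">
            <strategies hint="list:AddStrategy">
              <!-- Remove the default publish-end strategy on CD -->
              <strategy ref="contentSearch/indexConfigurations/indexUpdateStrategies/onPublishEndAsyncSingleInstance">
                <patch:delete />
              </strategy>
              <!-- CD never updates the index itself; the CM does -->
              <strategy ref="contentSearch/indexConfigurations/indexUpdateStrategies/manual" />
            </strategies>
          </index>
        </indexes>
      </configuration>
    </contentSearch>
  </sitecore>
</configuration>
```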

After making these changes, we restarted our CD instances and hit the site and, voilà, instead of "Bad Gateway" we saw our site, and all gateway statuses turned from "Faulty" to "Healthy".

Solution

The EventQueue had grown very large, and its entries were multiplied by those huge Sitecore items, so memory kept filling up. The site then took longer to come up than the gateway's configured timeout, which is why it returned "Bad Gateway". We fixed it in two steps:

1) Delete the EventQueue table entries, and configure Sitecore to clean up the EventQueue so that it only keeps a limited number of records in future. Sitecore recommends keeping the EventQueue to around 1,000 records for good performance.

2) Let only the CM perform indexing operations: change the indexing strategy on all CDs to manual and keep indexing on the CM alone.
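For step 1, the automatic cleanup is handled by Sitecore's scheduled `CleanupEventQueue` agent. The patch below is a sketch, assuming the stock agent definition from Sitecore.config in 8.x/9.x; the values are placeholders rather than a recommendation, and you should check how `IntervalToKeep` and `DaysToKeep` interact in your version and verify the merged result in `/sitecore/admin/showconfig.aspx`:

```xml
<configuration xmlns:patch="http://www.sitecore.net/xmlconfig/">
  <sitecore>
    <scheduling>
      <!-- Stock agent that periodically deletes old EventQueue rows -->
      <agent type="Sitecore.Tasks.CleanupEventQueue, Sitecore.Kernel" method="Run">
        <!-- Placeholder retention values: entries older than this are removed -->
        <IntervalToKeep>04:00:00</IntervalToKeep>
        <DaysToKeep>1</DaysToKeep>
      </agent>
    </scheduling>
  </sitecore>
</configuration>
```

A shorter retention window keeps the table smaller, at the cost of instances that were offline longer than that window missing some events.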

After this change we also scaled the app back down to S1, and everything is still working fine. I hope this helps someone facing the same issue.
